Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

→ Article about extracting text from pages, online documents, and images using OpenCV and Pytesseract in Python.

There are different solutions for extracting text from images, such as Google Lens, ChatGPT, or other AI models. They are fast and accurate, but they come with restrictions: some require a premium membership, some have usage limits, and so on. In this article, I will show you how to extract text from images using OpenCV and Pytesseract in Python. You don’t need to buy anything or register on a website, just write code :D

You probably know what OCR is, but if you don’t: OCR stands for Optical Character Recognition, and it is about extracting text from almost anything you can think of, such as pages, signs, license plates, and online documents.

With OCR, you can build a wide range of applications, for instance a text translator, a document summarizer, or a document fraud detector. If you are looking for project ideas, my recommendation is to extract text and feed it into various deep learning models.

There are ready-to-use solutions; you can directly use one of them for extracting text:

Okay, enough talking. Let’s write some code!

→ Process an image with OpenCV, extract text with Pytesseract, and save the extracted text to a txt file.

You can install pytesseract with pip. The code below also uses OpenCV and Matplotlib, so install them too:

```shell
pip install pytesseract opencv-python matplotlib
```

There is one additional step: pytesseract is only a wrapper, so you also need to install the Tesseract OCR executable itself. You can download the executable from here; it won’t take more than 5 minutes to install completely.

Don’t forget to set `pytesseract.pytesseract.tesseract_cmd` to your executable’s path. This is needed when the Tesseract executable is not on your system PATH.
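If you’d rather not hard-code the path, a small helper can look for the executable first. This is a minimal sketch, assuming Tesseract is either on your PATH or at the Windows location used in this article; `find_tesseract` and `WINDOWS_DEFAULT` are hypothetical names, not part of pytesseract.

```python
import os
import shutil
from typing import Optional, Sequence

# Hypothetical default: the Windows install location used in this article.
# Add other candidate paths for your own system.
WINDOWS_DEFAULT = r"C:\Program Files\Tesseract-OCR\tesseract.exe"


def find_tesseract(candidates: Sequence[str] = (WINDOWS_DEFAULT,)) -> Optional[str]:
    """Return a path to the tesseract executable, or None if none is found.

    The system PATH is checked first, then each candidate file location.
    """
    on_path = shutil.which("tesseract")
    if on_path:
        return on_path
    for path in candidates:
        if os.path.isfile(path):
            return path
    return None
```

When it returns a path, assign it with `pytesseract.pytesseract.tesseract_cmd = find_tesseract()`. When it returns `None`, Tesseract is not installed (or lives somewhere else), and pytesseract will raise an error as soon as it tries to run it.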

# Import libraries

import cv2

import pytesseract

import matplotlib.pyplot as plt

# Mention the installed location of Tesseract-OCR in your system

pytesseract.pytesseract.tesseract_cmd = r'C:\Program Files\Tesseract-OCR\tesseract.exe'

# Read the image from which text needs to be extracted

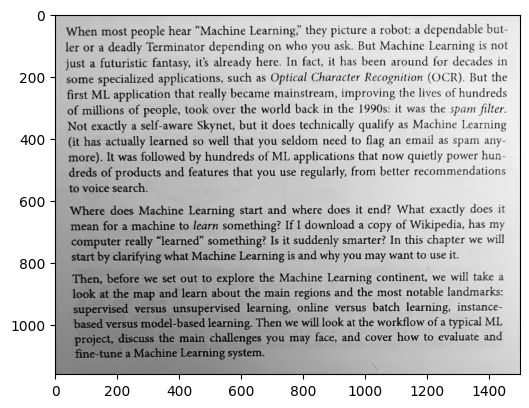

img = cv2.imread("resources/text_images/paragraph4.jpeg")

# Convert the image to the grayscale

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# visualize the grayscale image

plt.imshow(gray,cmap="gray")

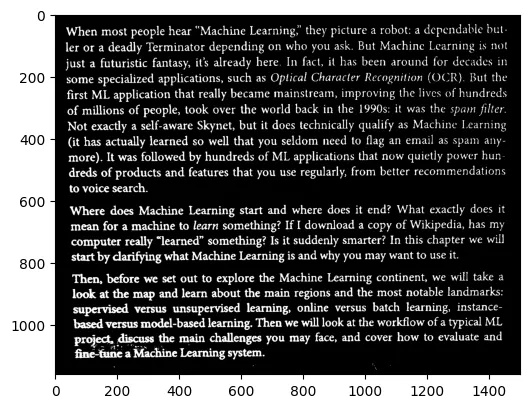

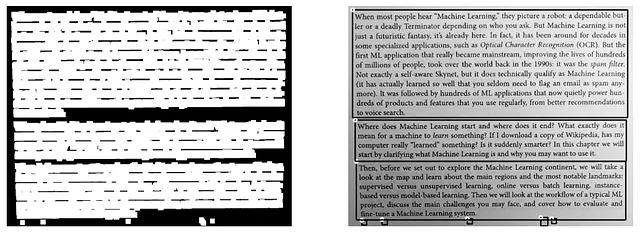
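What does `cv2.COLOR_BGR2GRAY` actually compute? According to OpenCV’s color conversion documentation, each gray pixel is a weighted sum of the channels, Y = 0.299·R + 0.587·G + 0.114·B, and OpenCV stores color images in B, G, R channel order. The NumPy sketch below reproduces that formula; `bgr_to_gray` is a hypothetical name, not an OpenCV function, and OpenCV’s optimized 8-bit code may round a few pixels slightly differently.

```python
import numpy as np


def bgr_to_gray(img_bgr: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 BGR image to an (H, W) uint8 gray image."""
    # OpenCV channel order is B, G, R
    b = img_bgr[..., 0].astype(np.float64)
    g = img_bgr[..., 1].astype(np.float64)
    r = img_bgr[..., 2].astype(np.float64)
    # Luma weights from OpenCV's RGB/BGR -> gray conversion formula
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

Because of the weights, pure blue (255, 0, 0 in BGR) maps to a dark gray of about 29, while pure red maps to about 76.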

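Next, binarize the image with the `cv2.threshold()` function. With Otsu’s method (`cv2.THRESH_OTSU`), you don’t have to choose a threshold value yourself: OpenCV computes it from the image histogram, so you simply pass `0` as the threshold, and the computed value is returned as `ret`. `cv2.THRESH_BINARY_INV` inverts the result, so the dark text becomes white on a black background, which is what the dilation step needs.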

```python
# Perform Otsu thresholding; ret holds the threshold value Otsu picked
ret, thresh1 = cv2.threshold(gray, 0, 255, cv2.THRESH_OTSU | cv2.THRESH_BINARY_INV)

# Visualize the thresholded image
plt.imshow(thresh1)
plt.show()
```

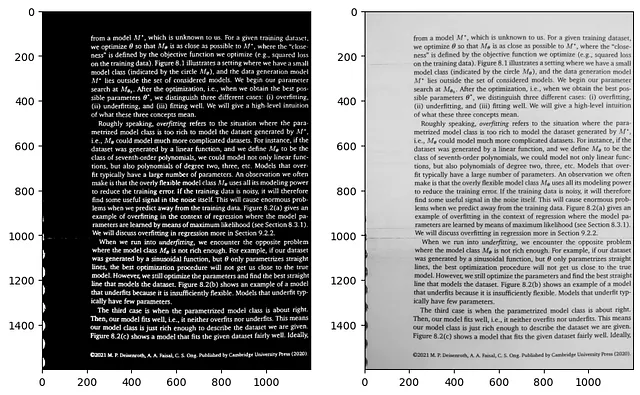
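To see how Otsu’s method chooses the threshold on its own, here is a NumPy sketch of the idea (`otsu_threshold` is a hypothetical helper, not an OpenCV function). It tries every threshold t from 0 to 255, splits the pixels into a "≤ t" class and a "> t" class, and keeps the t that maximizes the between-class variance n₀·n₁·(μ₀ − μ₁)². On the same grayscale image its result should be close to the `ret` value from `cv2.threshold`, but this sketch is not guaranteed to match OpenCV bit for bit.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return Otsu's threshold t for a uint8 grayscale image.

    Pixels <= t form one class and pixels > t the other; t is the value
    that maximizes the between-class variance of the two classes.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)

    n0 = np.cumsum(hist)             # number of pixels <= t
    n1 = hist.sum() - n0             # number of pixels > t
    s0 = np.cumsum(hist * levels)    # sum of intensities <= t
    s1 = s0[-1] - s0                 # sum of intensities > t

    # Class means; thresholds that leave one class empty keep a score of 0
    valid = (n0 > 0) & (n1 > 0)
    mu0 = np.zeros(256)
    mu1 = np.zeros(256)
    mu0[valid] = s0[valid] / n0[valid]
    mu1[valid] = s1[valid] / n1[valid]

    # Between-class variance, up to a constant factor of 1 / total_pixels**2
    between = n0 * n1 * (mu0 - mu1) ** 2
    return int(np.argmax(between))
```

Applying the threshold the way `cv2.THRESH_BINARY_INV` does is then one line, `binary_inv = np.where(gray > t, 0, 255).astype(np.uint8)`, which turns the dark text (≤ t) white and the light background black.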

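From here on, the pipeline is: binary image → dilation → find contours → extract text from each contour with the `image_to_string()` function. Dilation thickens the white text so that neighboring characters and words merge into solid blocks; each block then yields one external contour, whose bounding rectangle is cropped and passed to Tesseract.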
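To see why dilation turns separate characters into text blocks, here is a NumPy sketch of binary dilation with a square kernel, similar in spirit to `cv2.dilate` with a `MORPH_RECT` kernel. The name `dilate_rect` is hypothetical, and the sketch only handles odd kernel sizes. A pixel becomes white if any pixel in the k × k window centered on it is white, so gaps narrower than the kernel close up; that is why a bigger kernel produces fewer, larger rectangles.

```python
import numpy as np


def dilate_rect(binary: np.ndarray, ksize: int) -> np.ndarray:
    """Binary dilation with a ksize x ksize rectangular kernel (ksize odd).

    Returns a uint8 image with foreground 255 and background 0.
    """
    if ksize % 2 == 0:
        raise ValueError("this sketch only supports odd kernel sizes")
    r = ksize // 2
    fg = binary > 0
    # Pad with background so the window never reads outside the image
    padded = np.pad(fg, r, mode="constant", constant_values=False)
    out = np.zeros_like(fg)
    h, w = fg.shape
    # OR together every shifted copy of the image inside the window
    for dy in range(ksize):
        for dx in range(ksize):
            out |= padded[dy:dy + h, dx:dx + w]
    return np.where(out, 255, 0).astype(np.uint8)
```

For example, two single white pixels five columns apart stay separate after dilation with a 3 × 3 kernel, but merge into one blob with a 5 × 5 kernel.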

```python
# Dilation kernel size: a bigger kernel merges more text together,
# which means fewer (and larger) rectangles
rect_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (25, 25))

# Apply dilation on the thresholded image
dilation = cv2.dilate(thresh1, rect_kernel, iterations=1)

# Find the outer contours of the dilated text blocks
contours, hierarchy = cv2.findContours(dilation, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# Copy of the original image used only for drawing the detected boxes.
# Crops are taken from the clean grayscale image instead, so the drawn
# rectangles and circles don't end up in the OCR input.
im2 = img.copy()

cnt_list = []
for cnt in contours:
    x, y, w, h = cv2.boundingRect(cnt)

    # Draw a rectangle around the block and mark its top-left corner
    cv2.rectangle(im2, (x, y), (x + w, y + h), (0, 255, 0), 2)
    cv2.circle(im2, (x, y), 8, (255, 255, 0), 8)

    # Crop the text block to give as input to OCR
    # (you can crop from a binary image as well)
    cropped = gray[y:y + h, x:x + w]

    # Apply OCR on the cropped image
    text = pytesseract.image_to_string(cropped)
    cnt_list.append([x, y, text])

# Show the detected text blocks (OpenCV uses BGR, Matplotlib expects RGB)
plt.imshow(cv2.cvtColor(im2, cv2.COLOR_BGR2RGB))
plt.show()

# Sort the text blocks by their y coordinate, so they go from top to bottom
sorted_list = sorted(cnt_list, key=lambda item: item[1])
```

```python
# Write the recognized text to a file, one block per line, from top to bottom.
# Mode "w" creates the file, or empties it if it already exists.
with open("recognized2.txt", "w", encoding="utf-8") as file:
    for x, y, text in sorted_list:
        file.write(text)
        file.write("\n")
```
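Sorting by the y coordinate alone has a weakness: when two blocks sit on the same line (for example a label and its value), their order depends on which bounding box happens to start a few pixels higher. Below is a minimal sketch of one fix, assuming that blocks whose top edges lie within a tolerance belong to the same row: group them into rows, then sort each row left to right. `reading_order` and the `row_tolerance` default of 20 pixels are hypothetical choices you would tune to your image resolution.

```python
from typing import List, Sequence


def reading_order(blocks: Sequence[Sequence], row_tolerance: int = 20) -> List[Sequence]:
    """Sort (x, y, text) blocks top to bottom, then left to right within a row.

    A block joins the current row if its y is within row_tolerance pixels
    of the y of the first (topmost) block in that row.
    """
    rows: List[List[Sequence]] = []
    for block in sorted(blocks, key=lambda b: b[1]):
        if rows and block[1] - rows[-1][0][1] <= row_tolerance:
            rows[-1].append(block)
        else:
            rows.append([block])

    ordered: List[Sequence] = []
    for row in rows:
        ordered.extend(sorted(row, key=lambda b: b[0]))
    return ordered
```

To try it, replace the `sorted(...)` call with `sorted_list = reading_order(cnt_list)`; the `[x, y, text]` entries in `cnt_list` work as-is.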