Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

OpenCV provides a large number of image processing functions, and with them a lot of information can be extracted from images. The best way to understand how these functions work and when to use each one is to apply them in sequence to a problem that you want to solve. In my case, I have always wanted to create a system that generates FEN (Forsyth-Edwards Notation, the text format for describing a chess position, which sites like chess.com and lichess.org can turn into a digital board) from chessboard images.

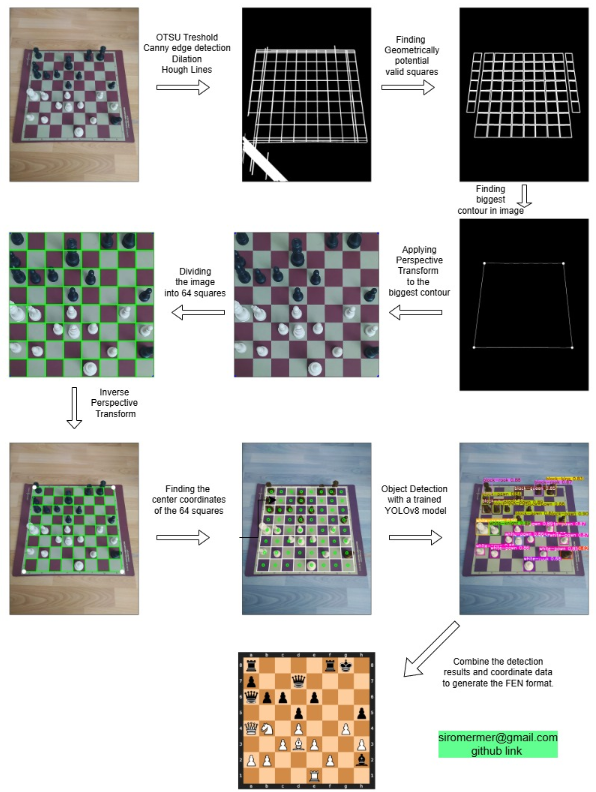

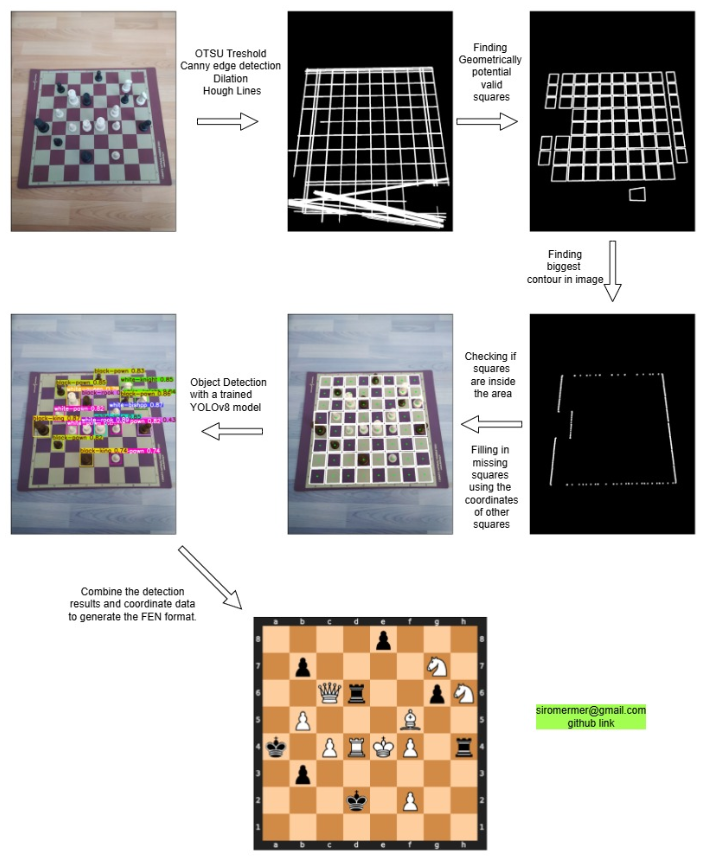

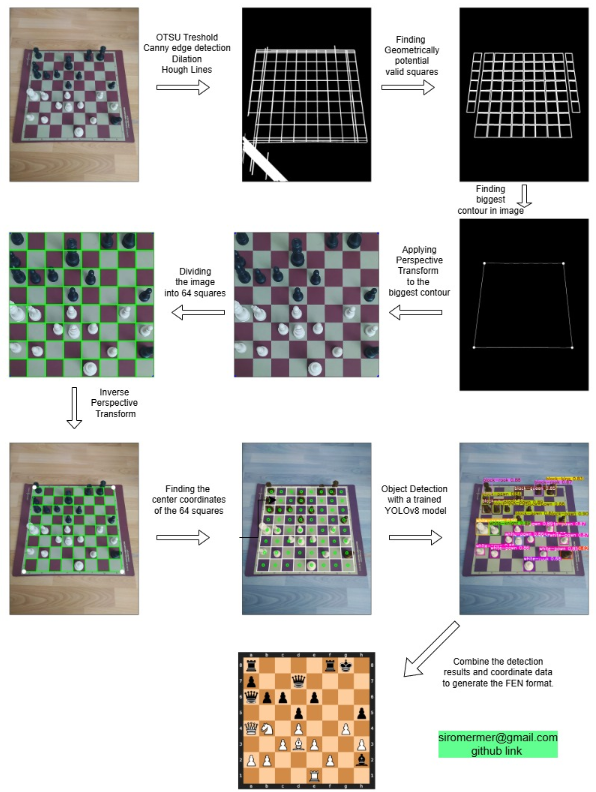

In this article, I will show you two different approaches for solving this problem. Both approaches first use classical computer vision methods to find the chessboard and the coordinates of its squares, then use a YOLO object detection model to detect the pieces, and finally combine the two results to generate a FEN string.

I am not going to share code here because it is too long for a blog post. I will explain how these two approaches work. If you want to see all the code, model, and documentation, you can check the GitHub repository. Both Python and C++ implementations are available inside the repository.

https://github.com/siromermer/Dynamic-Chess-Board-Piece-Extraction

Actually, I have 2 charts, and I don’t think it can be explained better with words, but anyway, I will try 😀.

As I said before, there are two different implementations: the square-filling algorithm and the perspective transformation approach.

Every chessboard has 8 rows and 8 columns, and this is an important pattern. This information can be used for filling in missing squares. Sometimes some squares are not accurately detected because of:

You can see the whole process in the image below: different filters are applied to detect the 64 squares, then the YOLO model is used to detect pieces, and finally, these two are combined to generate FEN.
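The last step, combining square coordinates with piece detections, can be sketched roughly as follows. This is a minimal illustration, not the code from the repository: the function name `board_to_fen`, the input layout (64 square boxes ordered from a8 to h1, detections as `(symbol, x1, y1, x2, y2)` tuples), and the choice of a point near the bottom of each piece box are all assumptions made for the example. It produces only the piece-placement field of FEN.

```python
def board_to_fen(squares, detections):
    """Build the piece-placement field of a FEN string.

    squares:    64 boxes (x1, y1, x2, y2), ordered a8..h8, a7..h7, ..., a1..h1
                (hypothetical layout chosen for this sketch).
    detections: (fen_symbol, x1, y1, x2, y2) tuples, e.g. ("K", 410, 710, 490, 790).
    """
    board = [None] * 64

    for symbol, x1, y1, x2, y2 in detections:
        # Assumption: a point in the lower part of the box is used, because the
        # base of a piece sits on its square while the top may overlap the
        # square behind it.
        px = (x1 + x2) / 2
        py = y1 + 0.75 * (y2 - y1)
        for idx, (sx1, sy1, sx2, sy2) in enumerate(squares):
            if sx1 <= px < sx2 and sy1 <= py < sy2:
                board[idx] = symbol
                break

    ranks = []
    for r in range(8):
        row, empty = "", 0
        for cell in board[r * 8:(r + 1) * 8]:
            if cell is None:
                empty += 1          # count consecutive empty squares
            else:
                if empty:
                    row += str(empty)
                    empty = 0
                row += cell
        if empty:
            row += str(empty)
        ranks.append(row)
    return "/".join(ranks)


# Usage: an 800x800 board split into 100-pixel squares, with two kings on it.
squares = [(c * 100, r * 100, (c + 1) * 100, (r + 1) * 100)
           for r in range(8) for c in range(8)]
detections = [("K", 410, 710, 490, 790), ("k", 410, 10, 490, 90)]
fen = board_to_fen(squares, detections)
```

In a real pipeline the symbols would come from mapping the YOLO class names to FEN letters (uppercase for white, lowercase for black).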

The square-filling algorithm works better with non-angled (straight) images.
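To make the idea of filling in missing squares more concrete, here is a small sketch that relies on the 8x8 pattern. It is only an illustration under simplifying assumptions and not necessarily how the repository does it: the name `fill_missing_squares` is hypothetical, the board is assumed to be axis-aligned (not rotated or tilted), and the bounding box of the whole board is assumed to be known already, for example from the biggest contour.

```python
def fill_missing_squares(detected, board_box):
    """Return an 8x8 grid of (x, y, w, h) boxes, row-major from the top-left.

    detected:  (x, y, w, h) boxes of the squares that were actually found.
    board_box: (x, y, w, h) of the whole board (assumed axis-aligned).
    """
    bx, by, bw, bh = board_box
    cell_w, cell_h = bw / 8, bh / 8
    grid = [[None] * 8 for _ in range(8)]

    # Place every detected square into the grid cell that contains its center.
    for x, y, w, h in detected:
        cx, cy = x + w / 2, y + h / 2
        col = int((cx - bx) // cell_w)
        row = int((cy - by) // cell_h)
        if 0 <= row < 8 and 0 <= col < 8:
            grid[row][col] = (x, y, w, h)

    # Any cell that is still empty gets a synthetic box derived from the
    # regular 8x8 layout of the board.
    for row in range(8):
        for col in range(8):
            if grid[row][col] is None:
                grid[row][col] = (bx + col * cell_w, by + row * cell_h,
                                  cell_w, cell_h)
    return grid


# Usage: all squares detected except the one in row 3, column 5.
found = [(c * 100, r * 100, 100, 100)
         for r in range(8) for c in range(8) if (r, c) != (3, 5)]
grid = fill_missing_squares(found, (0, 0, 800, 800))
```

Because the synthetic boxes come from an even division of the board, this kind of filling only makes sense when the squares really are evenly spaced in the image, which fits the observation that the approach works better on straight images.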

The first steps are the same as in the square-filling algorithm. The difference is that, after the biggest contour in the image is found, a perspective transformation is applied. If you are not familiar with perspective transformation, you can read my article.
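As a rough sketch of that step, the four corners of the board contour can be ordered consistently and then mapped to a square output image. The helper names `order_corners` and `warp_board` and the 800-pixel output size are assumptions for this example; `cv2.getPerspectiveTransform` and `cv2.warpPerspective` are the standard OpenCV calls for this kind of warp, but the repository may use them differently.

```python
def order_corners(points):
    """Order four (x, y) points as top-left, top-right, bottom-right, bottom-left.

    Uses the common sum/difference heuristic, which assumes the board is not
    rotated close to 45 degrees in the image.
    """
    pts = [tuple(p) for p in points]
    top_left = min(pts, key=lambda p: p[0] + p[1])
    bottom_right = max(pts, key=lambda p: p[0] + p[1])
    top_right = max(pts, key=lambda p: p[0] - p[1])
    bottom_left = min(pts, key=lambda p: p[0] - p[1])
    return [top_left, top_right, bottom_right, bottom_left]


def warp_board(image, corners, size=800):
    """Warp the board region to a size x size top-down view (needs OpenCV)."""
    # Imported here so that order_corners can be used without OpenCV installed.
    import cv2
    import numpy as np

    src = np.array(order_corners(corners), dtype=np.float32)
    dst = np.array([[0, 0], [size - 1, 0],
                    [size - 1, size - 1], [0, size - 1]], dtype=np.float32)
    matrix = cv2.getPerspectiveTransform(src, dst)
    return cv2.warpPerspective(image, matrix, (size, size))


# Usage: corners of a tilted board, given in arbitrary order.
ordered = order_corners([(90, 400), (420, 80), (60, 50), (450, 430)])
```

After the warp, the board fills the whole output image, so the 64 squares can be obtained by dividing it into an even 8x8 grid.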

The perspective transformation approach works better with images taken at an angle.

You can find the full code, documentation, and model files at this GitHub link.