Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

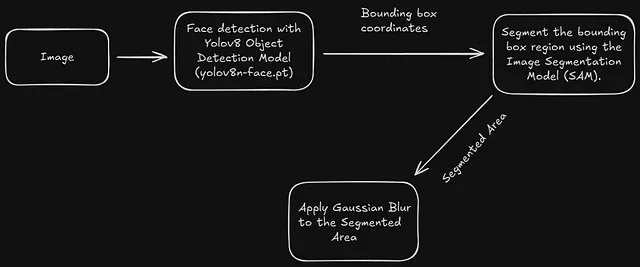

→ Detect faces using a pretrained YOLO model, segment the detected regions with the SAM segmentation model, and apply Gaussian blur to the segmented areas.

I like combining YOLO with a promptable segmentation model like SAM because the two pretrained models together can detect and segment objects. You don’t need to train any model, and you don’t need any dataset. SAM does not output class labels by design, but YOLO does. So we will first detect faces with YOLO, then segment the detected regions with SAM, and finally blur the faces in the image.

You don’t have to limit yourself to blurring faces; combining YOLO with SAM lets you build many different applications. After detection, YOLO gives you box coordinates and labels, and passing those coordinates to the SAM model as a prompt produces the segmentation automatically.

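The steps are quite easy to implement and understand. There are three main steps: detect faces with a pretrained YOLO model, segment the detected regions with SAM, and apply Gaussian blur to the segmented areas.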

Look at the chart; it shows exactly what we are going to build.

As I mentioned before, many pretrained models are available for specific tasks. You can find various object detection models on Roboflow or Kaggle; most of the time, one of these two websites will have a model you can use.

If you can’t find a model that fits your purpose, or if you prefer training your own model, you can check my article about how to train YOLO object detection models with a custom dataset.

I will use the yolov8n-face.pt model (link) for face detection. It is already trained for face detection, so you can download it and continue.

SAM doesn’t work like conventional segmentation models such as Mask R-CNN. The typical workflow for training and deploying an image segmentation model is to collect a dataset, annotate it, and then train the model. Only after that can you perform segmentation on images and videos.

SAM is a promptable segmentation system with zero-shot generalization to unfamiliar objects and images, without the need for additional training.

(source: SAM website)

It is pretty cool: you don’t have to train anything. You only need to give the SAM model a prompt, and this prompt must be some kind of positional data. There are three kinds of input: points, bounding boxes, and masks.

Since the YOLO model outputs bounding box coordinates, I will use the second input type, bounding boxes.
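As a small illustration of preparing a box prompt, here is a minimal sketch that turns one detection in (x1, y1, x2, y2) form into an integer box clipped to the image bounds. The helper name `to_sam_box` and the sample values are hypothetical and not part of the original pipeline; clipping is an optional safety step I am assuming here, not something SAM requires.

```python
import numpy as np


def to_sam_box(box_xyxy, image_shape):
    """Convert one (x1, y1, x2, y2) detection into an integer XYXY box.

    Hypothetical helper: coordinates are clipped to the image so the
    prompt never points outside the picture.
    """
    height, width = image_shape[:2]
    x1, y1, x2, y2 = (float(v) for v in box_xyxy)
    x1, x2 = np.clip([x1, x2], 0, width - 1)
    y1, y2 = np.clip([y1, y2], 0, height - 1)
    return np.array([x1, y1, x2, y2]).round().astype(int)


# Example values only: a box that slightly overflows a 640x480 image
box = to_sam_box([-3.2, 10.7, 120.4, 500.0], image_shape=(480, 640, 3))
print(box)
```

The resulting array has the same corner order that the detection step produces, so it could be passed on as the box prompt.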

You can download the SAM model from this link. If you don’t have a GPU-enabled PyTorch environment, I recommend downloading the base model (vit_b) instead of the huge one (vit_h).
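The recommendation above can be written down as a tiny helper. This is only a sketch: `choose_sam_model` is a hypothetical name, and the filenames are the checkpoint names published in the segment-anything repository, assumed to sit in your working directory. In the real script you would pass `torch.cuda.is_available()` as the argument.

```python
from typing import Tuple

# Checkpoint filenames as published in the segment-anything repository
SAM_CHECKPOINTS = {
    "vit_b": "sam_vit_b_01ec64.pth",  # base model, lightest
    "vit_l": "sam_vit_l_0b3195.pth",  # large model
    "vit_h": "sam_vit_h_4b8939.pth",  # huge model, heaviest
}


def choose_sam_model(cuda_available: bool) -> Tuple[str, str]:
    """Pick the huge model when a GPU is available, otherwise the base model."""
    model_type = "vit_h" if cuda_available else "vit_b"
    return model_type, SAM_CHECKPOINTS[model_type]


# Without a GPU, fall back to the base model
model_type, checkpoint = choose_sam_model(cuda_available=False)
print(model_type, checkpoint)
```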

Gaussian blur replaces each pixel with a weighted average of its neighbors, with weights given by a Gaussian distribution. Its main parameters are the filter (kernel) size and the standard deviation, which together control how strong the blur is. You can adjust these parameters to increase or decrease the effect.

If you increase the filter size and the standard deviation, the amount of blurring increases.
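To make the effect of the standard deviation concrete, here is a minimal sketch that computes normalized 1D Gaussian weights with NumPy. The function `gaussian_kernel_1d` is a hypothetical helper written for illustration; it is not the OpenCV implementation, only the same idea in a few lines.

```python
import numpy as np


def gaussian_kernel_1d(size: int, sigma: float) -> np.ndarray:
    """Return normalized 1D Gaussian weights for an odd kernel size."""
    if size < 1 or size % 2 == 0:
        raise ValueError("size must be a positive odd integer")
    # Distance of each tap from the kernel center, e.g. [-3, ..., 3] for size 7
    offsets = np.arange(size) - size // 2
    weights = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    # Normalize so the weights sum to 1 and overall brightness is preserved
    return weights / weights.sum()


narrow = gaussian_kernel_1d(7, sigma=1.0)  # weight concentrated at the center
wide = gaussian_kernel_1d(7, sigma=3.0)    # weight spread toward the edges

print(np.round(narrow, 3))
print(np.round(wide, 3))
```

With the larger standard deviation, the center pixel contributes less and distant neighbors contribute more, which is why the result looks more blurred.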

Okay, enough talking about models; let’s start coding.

I tried to explain everything with comments, so I hope there won’t be any problems.

%pip install git+https://github.com/facebookresearch/segment-anything.git

%pip install opencv-python ultralytics numpy torch matplotlib

import cv2

import matplotlib.pyplot as plt

import torch

import numpy as np

from ultralytics import YOLO

from segment_anything import sam_model_registry, SamPredictor

Don’t forget to change sam_checkpoint

# Load pretrained YOLOv8 face detection model

model = YOLO("yolov8n-face.pt")

# Load SAM segmentation model

sam_checkpoint = r"sam_vit_b_01ec64.pth" # change this to your path

"""

vit_b: SAM base model

vit_h: SAM huge model

"""

model_type = "vit_b"

# check if CUDA is available and set device accordingly

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using device: {device}")

# Load the SAM model and move it to the selected device (GPU if available, otherwise CPU)

sam = sam_model_registry[model_type](checkpoint=sam_checkpoint)

sam.to(device=device)

# Create SAM predictor instance

predictor = SamPredictor(sam)

Don’t forget to change image_path

# Load the image

image_path = "images/friends1.jpg" # Change this to your image file

image = cv2.imread(image_path)

# Run YOLO face detection model on full image

results = model(image)[0]

# SAM expects RGB input by default, while OpenCV loads BGR; set the image once, before the loop

predictor.set_image(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

# Blur a copy of the whole image once; face pixels will be copied from it

blurred_image = cv2.GaussianBlur(image, (7, 7), 0)

# Loop over each detected face and segment it

for box in results.boxes.xyxy:

    x1, y1, x2, y2 = map(int, box)

    input_box = np.array([[x1, y1, x2, y2]])

    # Run SAM segmentation model with the YOLO box as the prompt

    masks, _, _ = predictor.predict(box=input_box, multimask_output=False)

    # Boolean mask of the segmented face (True inside the face)

    mask = masks[0].astype(bool)

    # Replace the face pixels with their blurred counterparts; blurring the full image
    # first avoids dark or bright halos along the mask edge

    image[mask] = blurred_image[mask]

# Show the result (OpenCV stores images as BGR, matplotlib expects RGB)

plt.figure(figsize=(15, 8))

plt.xticks([])

plt.yticks([])

plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

plt.show()
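The mask-based replacement step can also be looked at in isolation. The sketch below uses small synthetic arrays instead of a real photo and a real blur, so the values are placeholders; `composite_with_mask` is a hypothetical helper name. It shows one way to take pixels from a blurred image only where a segmentation mask is set.

```python
import numpy as np


def composite_with_mask(original, blurred, mask):
    """Take pixels from `blurred` where `mask` is True, else from `original`."""
    # Add a channel axis so the (H, W) mask broadcasts over (H, W, 3) images
    mask3 = mask.astype(bool)[..., None]
    return np.where(mask3, blurred, original)


# Synthetic stand-ins: a bright "photo" and a darker "blurred" version of it
original = np.full((4, 4, 3), 200, dtype=np.uint8)
blurred = np.full((4, 4, 3), 50, dtype=np.uint8)

# Pretend the segmentation model marked the central 2x2 region as a face
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

result = composite_with_mask(original, blurred, mask)
print(result[:, :, 0])
```

Only the masked region changes; everything outside the mask keeps its original pixel values.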

I have another article about combining YOLO object detection and SAM segmentation models that you can read.

Okay, that’s it from me. I hope you liked it, and if you have any questions, feel free to ask me (siromermer@gmail.com).