Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

An article about tracking moving objects with OpenCV background subtractors, implemented in Python and C++.

Close your eyes and imagine an ordinary day and the sky above you. There are clouds, maybe the sun, maybe the moon. Sometimes you see moving objects such as a plane or a helicopter. Apart from those moving objects, every other part of the sky stays where it is, or moves so slowly that we don't even notice. We can use this to track objects: if we compare each frame of a video with the previous one, the other elements (clouds, sun, moon) barely change, so the pixels that do change belong to the target object (plane, helicopter, etc.).
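
As a minimal illustration of this frame-comparison idea, the sketch below uses plain NumPy on two synthetic grayscale frames, assuming a perfectly static background and a single moving object. The function name frame_difference_box and the change threshold of 25 are hypothetical choices for this example; in OpenCV the same two steps would typically be done with cv2.absdiff and cv2.threshold.

```python
import numpy as np


def frame_difference_box(prev_frame, curr_frame, thresh=25):
    """Hypothetical helper: return (x, y, w, h) around every pixel that changed
    by more than `thresh` between two grayscale frames, or None if nothing changed."""
    # per-pixel absolute difference; int16 avoids uint8 wrap-around on subtraction
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    # binary motion mask: True where the change exceeds the threshold
    ys, xs = np.nonzero(diff > thresh)
    if xs.size == 0:
        return None
    x, y = int(xs.min()), int(ys.min())
    return x, y, int(xs.max()) - x + 1, int(ys.max()) - y + 1


# synthetic 100x100 "sky" with a constant gray background
sky = np.full((100, 100), 180, dtype=np.uint8)

prev_frame = sky.copy()
prev_frame[40:50, 10:20] = 30  # dark "plane" covering x = 10..19
curr_frame = sky.copy()
curr_frame[40:50, 15:25] = 30  # the plane moved 5 pixels to the right

print(frame_difference_box(prev_frame, curr_frame))  # (10, 40, 15, 10)
print(frame_difference_box(sky, sky))                # None
```

The box (10, 40, 15, 10) spans both the old and the new position of the object, and the overlapping columns (x = 15 to 19) do not register as change at all. These blind spots are one reason the background subtractors described below build a model from many frames instead of comparing only two.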

It is worth mentioning that this method is not a good choice for every environment. If the background of a scene is not constant, the subtractor will produce many false bounding boxes. I recommend testing this method in scenes like the sky, or a room where nothing moves except the target object.

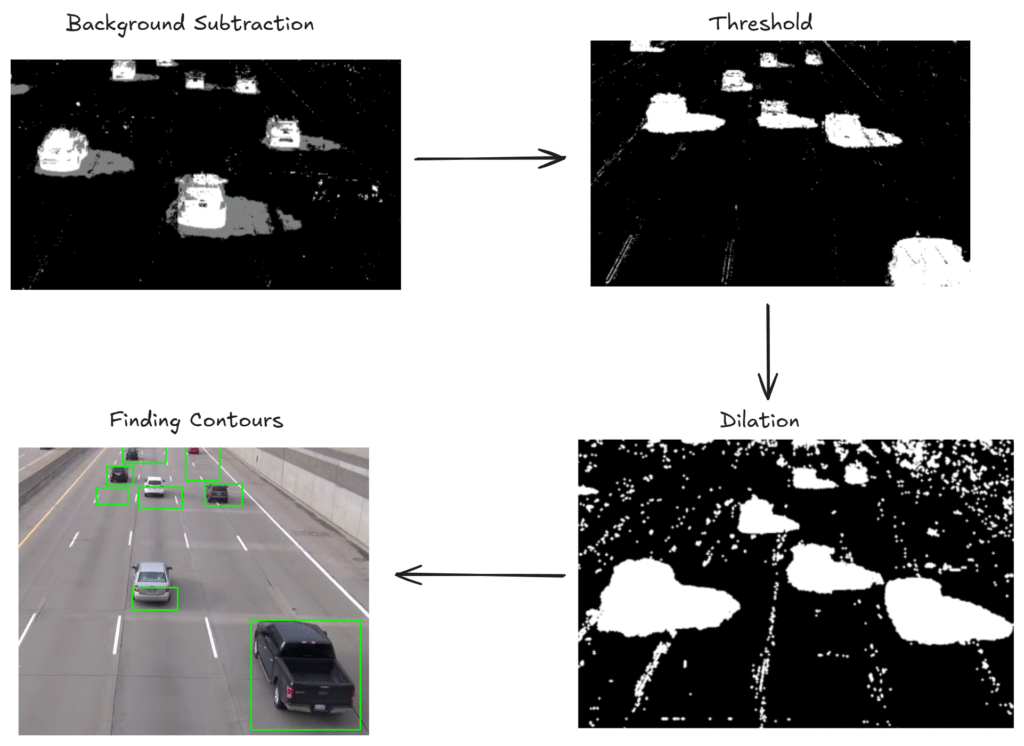

The chart below shows the main logic.

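OpenCV's video module provides two background subtractors:

- MOG2, a Gaussian mixture-based algorithm (cv2.createBackgroundSubtractorMOG2)
- KNN, a K-nearest-neighbours-based algorithm (cv2.createBackgroundSubtractorKNN)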

These two algorithms do more than compare consecutive frames. They use information from past frames to build a background model over time, then compare the current frame with that model to find the moving parts of the image.
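
To make the idea of a background model built from past frames concrete, here is a toy sketch using an exponential running average per pixel. This is a deliberately simpler technique than MOG2 (a Gaussian mixture per pixel) or KNN, and the class RunningAverageBackground, its learning rate of 0.05 and its threshold of 40 are hypothetical; it only shows why comparing against a learned background behaves differently from comparing consecutive frames. (OpenCV's own apply method also accepts an optional learningRate argument.)

```python
import numpy as np


class RunningAverageBackground:
    """Hypothetical toy background model: exponential running average of past frames.
    Not MOG2 or KNN, just the simplest model that learns from frame history."""

    def __init__(self, learning_rate=0.05, thresh=40):
        self.learning_rate = learning_rate  # weight of the newest frame in the model
        self.thresh = thresh                # minimum difference counted as foreground
        self.background = None              # float32 background estimate

    def apply(self, frame):
        """Return a 0/255 foreground mask for `frame`, then update the model with it."""
        frame = frame.astype(np.float32)
        if self.background is None:
            # first frame: it becomes the initial background, nothing is foreground yet
            self.background = frame.copy()
            return np.zeros(frame.shape, dtype=np.uint8)
        # compare against the learned background, not only the previous frame
        mask = np.where(np.abs(frame - self.background) > self.thresh, 255, 0).astype(np.uint8)
        # blend the new frame into the model: bg = (1 - a) * bg + a * frame
        self.background = (1 - self.learning_rate) * self.background + self.learning_rate * frame
        return mask


model = RunningAverageBackground()
sky = np.full((100, 100), 180, dtype=np.uint8)
for _ in range(30):
    model.apply(sky)  # learn the empty sky

plane = sky.copy()
plane[40:50, 10:20] = 30    # an object appears and then stays still
mask1 = model.apply(plane)
mask2 = model.apply(plane)  # identical frame: differencing these two frames would find nothing
print(mask1[45, 15], mask2[45, 15], mask2[0, 0])  # 255 255 0
```

Because the model adapts slowly, an object that stops moving is still reported as foreground on the next frame, whereas differencing two identical frames would report nothing. If the object stays still long enough, it is gradually absorbed into the background.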

If you want to learn how background subtractors work in detail, read the official OpenCV documentation.

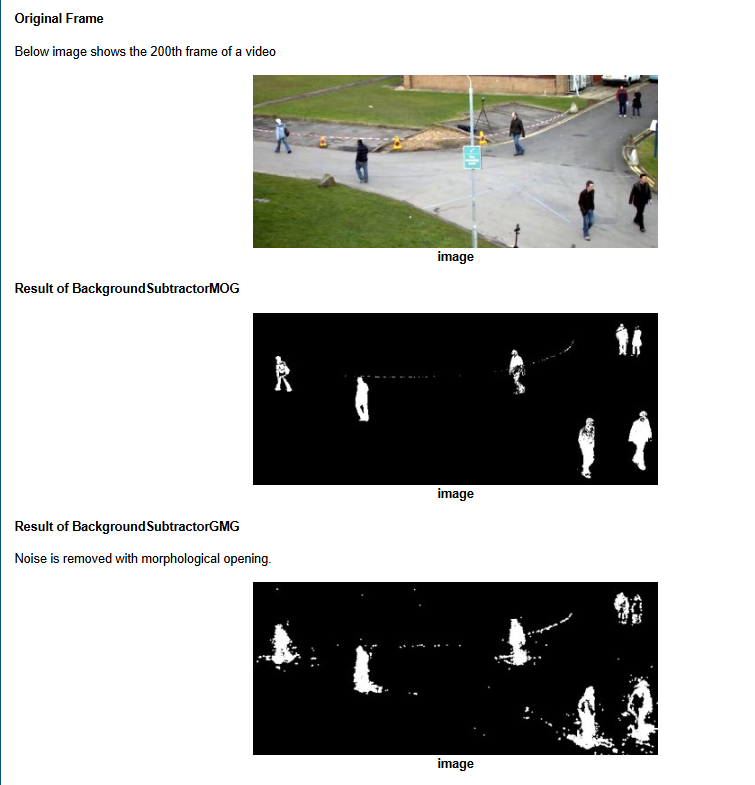

This example image is taken from the OpenCV documentation.

I added a comment for every step. I recommend copying this code and calling cv2.imshow after each operation to see how the image is transformed.
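
If you want to see what the dilation, contour and area-filter steps do before running the full script, the NumPy sketch below reimplements simplified versions of them on a tiny synthetic mask. It is an illustration under stated simplifications, not OpenCV's implementation: the hypothetical dilate3x3 uses a square 3x3 kernel instead of the elliptical one, and the hypothetical bounding_boxes labels 8-connected pixel regions instead of tracing contours with cv2.findContours, using a pixel count where the real code uses cv2.contourArea.

```python
from collections import deque

import numpy as np


def dilate3x3(mask, iterations=1):
    """Hypothetical helper: binary dilation with a square 3x3 kernel."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1)  # pad with False so border pixels have full neighbourhoods
        grown = np.zeros_like(out)
        # a pixel becomes foreground if any pixel in its 3x3 neighbourhood is foreground
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        out = grown
    return out


def bounding_boxes(mask, min_area):
    """Hypothetical helper: (x, y, w, h) of 8-connected regions with more than min_area pixels."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        # breadth-first flood fill collects one connected region
        seen[sy, sx] = True
        queue = deque([(int(sy), int(sx))])
        ys, xs = [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny in range(max(y - 1, 0), min(y + 2, h)):
                for nx in range(max(x - 1, 0), min(x + 2, w)):
                    if mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        # area filter: pixel count stands in for cv2.contourArea here
        if len(ys) > min_area:
            boxes.append((min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1))
    return boxes


mask = np.zeros((20, 20), dtype=bool)
mask[2, 2] = True           # single-pixel noise
mask[10:14, 10:13] = True   # upper half of an object (4 x 3 pixels)
mask[15:19, 10:13] = True   # lower half, separated by a one-row gap at y = 14

print(bounding_boxes(mask, min_area=10))             # [(10, 10, 3, 4), (10, 15, 3, 4)]
print(bounding_boxes(dilate3x3(mask), min_area=10))  # [(9, 9, 5, 11)]
```

Without dilation the object is split into two boxes; after one dilation the halves merge into a single box, while the single noisy pixel stays below the area limit and is discarded.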

Python Implementation

```python
# import libraries
import cv2
import numpy as np

# KNN
# detectShadows=True: shadow pixels are marked gray (127) in the foreground mask
# instead of white (255), so the threshold step below can remove them
KNN_subtractor = cv2.createBackgroundSubtractorKNN(detectShadows=True)

# MOG2 (detectShadows works the same way as for KNN)
MOG2_subtractor = cv2.createBackgroundSubtractorMOG2(detectShadows=True)

# choose your subtractor
bg_subtractor = MOG2_subtractor

camera = cv2.VideoCapture("resources/run.mp4")

while True:
    ret, frame = camera.read()

    # stop when the video ends or a frame cannot be read
    if not ret:
        break

    # Every frame is used both for calculating the foreground mask and for updating the background.
    foreground_mask = bg_subtractor.apply(frame)

    # threshold: pixels greater than 240 become 255, all others become 0,
    # which creates a binary (black and white) image and drops the gray shadow pixels
    ret, threshold_img = cv2.threshold(foreground_mask, 240, 255, cv2.THRESH_BINARY)

    # dilation expands or thickens regions of interest in an image
    dilated = cv2.dilate(threshold_img, cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3)), iterations=2)

    # find the outer contours (OpenCV 4.x returns contours and hierarchy)
    contours, hier = cv2.findContours(dilated, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # draw a bounding box around every contour whose area exceeds a minimum value
    for contour in contours:
        if cv2.contourArea(contour) > 50:
            (x, y, w, h) = cv2.boundingRect(contour)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 255, 0), 2)

    cv2.imshow("Subtractor", foreground_mask)
    cv2.imshow("threshold", threshold_img)
    cv2.imshow("detection", frame)

    # exit when ESC is pressed
    if cv2.waitKey(30) & 0xff == 27:
        break

camera.release()
cv2.destroyAllWindows()
```
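
The threshold value matters because, with detectShadows=True, both subtractors write shadow pixels into the mask with the value 127 (the default shadow value) and moving objects with 255. The NumPy sketch below reproduces the cv2.THRESH_BINARY rule with a hypothetical helper, binary_threshold, on a toy mask to show that a threshold of 120 keeps the shadows while 240 removes them.

```python
import numpy as np

# Toy 1x5 foreground mask in the format MOG2/KNN produce with detectShadows=True:
# 0 = background, 127 = shadow (the default shadow value), 255 = moving object
mask = np.array([[0, 127, 255, 127, 255]], dtype=np.uint8)


def binary_threshold(img, thresh, maxval=255):
    """Hypothetical helper, same rule as cv2.THRESH_BINARY: maxval where pixel > thresh, else 0."""
    return np.where(img > thresh, maxval, 0).astype(np.uint8)


print(binary_threshold(mask, 120).tolist())  # [[0, 255, 255, 255, 255]]  shadows kept
print(binary_threshold(mask, 240).tolist())  # [[0, 0, 255, 0, 255]]      shadows removed
```

Any threshold between 127 and 254 separates shadows from objects; keeping shadows makes the bounding boxes larger than the objects themselves.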

C++ Implementation

```cpp
#include <opencv2/opencv.hpp>

#include <iostream>
#include <string>
#include <vector>

// path to video
std::string video_path = "../../videos/street.mp4";

// Create KNN background subtractor.
// Arguments: history, dist2Threshold, detectShadows (OpenCV's default values).
// Passing only `true` would set history = 1, not detectShadows.
cv::Ptr<cv::BackgroundSubtractor> KNN_subtractor = cv::createBackgroundSubtractorKNN(500, 400.0, true);

// Create MOG2 background subtractor.
// Arguments: history, varThreshold, detectShadows (OpenCV's default values).
cv::Ptr<cv::BackgroundSubtractor> MOG2_subtractor = cv::createBackgroundSubtractorMOG2(500, 16.0, true);

int main() {
    // Choose your subtractor (here using MOG2)
    cv::Ptr<cv::BackgroundSubtractor> bg_subtractor = MOG2_subtractor;

    // Open the video file
    cv::VideoCapture video(video_path);
    if (!video.isOpened()) {
        std::cerr << "Could not open " << video_path << std::endl;
        return 1;
    }

    cv::Mat frame, foreground_mask, threshold_img, dilated;

    while (true) {
        bool ret = video.read(frame);
        if (!ret) {
            std::cout << "End of video." << std::endl;
            break;
        }

        // Apply the background subtractor to get the foreground mask
        bg_subtractor->apply(frame, foreground_mask);

        // Threshold to a binary image; 240 is above the shadow value (127), so shadows are dropped
        cv::threshold(foreground_mask, threshold_img, 240, 255, cv::THRESH_BINARY);

        // Dilate the thresholded image to thicken the regions of interest
        cv::dilate(threshold_img, dilated, cv::getStructuringElement(cv::MORPH_ELLIPSE, cv::Size(3, 3)), cv::Point(-1, -1), 1);

        // Find the outer contours in the dilated image
        std::vector<std::vector<cv::Point>> contours;
        cv::findContours(dilated, contours, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);

        // Draw bounding boxes for contours whose area exceeds a minimum value
        for (size_t i = 0; i < contours.size(); i++) {
            if (cv::contourArea(contours[i]) > 150) {
                cv::Rect bounding_box = cv::boundingRect(contours[i]);
                cv::rectangle(frame, bounding_box, cv::Scalar(255, 255, 0), 2);
            }
        }

        // Show the different outputs
        cv::imshow("Subtractor", foreground_mask);
        cv::imshow("Threshold", threshold_img);
        cv::imshow("Detection", frame);

        // Exit when 'ESC' is pressed
        if ((cv::waitKey(30) & 0xFF) == 27) break;
    }

    video.release();
    cv::destroyAllWindows();
    return 0;
}
```